Finding the right tech for your business

Your tech decisions should be rooted in the context of your business. That’s how you get results.

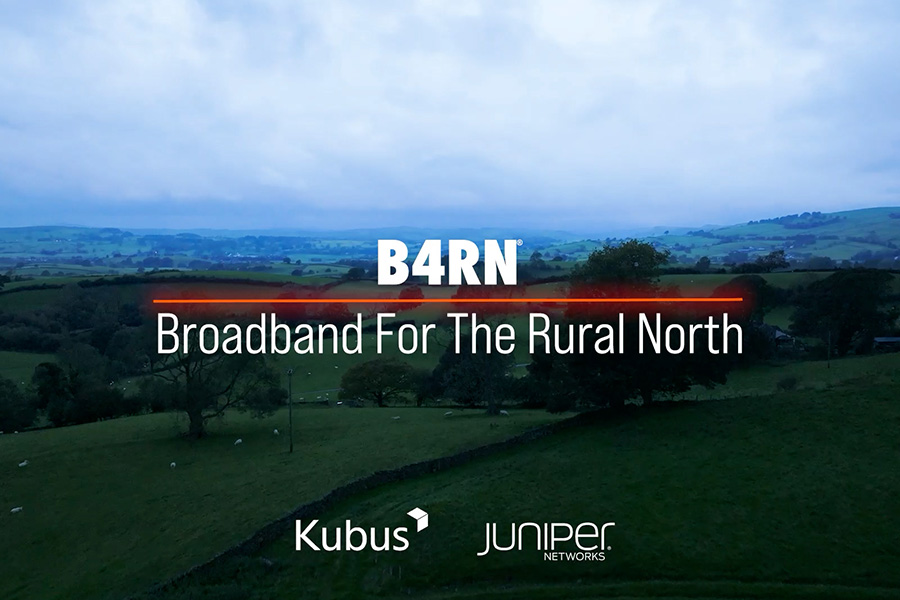

We listen to your challenges. We observe the way you work. We visualise your strategic ambitions. Then we design, deliver and implement technology that will drive meaningful outcomes for your business. So you can become even better at what you do best. It’s what we have been doing for over two decades – for everyone from startups to giants of the FTSE 100. We can do the same for you.

Trusted by government and multinationals, locally and abroad.

Want to know more about how Kubus can help your organisation?

Something more specific?

Our expertise stretches across all realms of IT – from cloud networking to security. We can even offer board-level input to advocate for your organisation’s ongoing tech strategy. If you need assistance with a specific project, browse our services or pick up the phone. We really can help you to drive positive business outcomes with anything IT-related.

We won’t keep you waiting…

It’s frustrating to be slowed down. Especially when you are on a deadline. It’s why we measure our response times in minutes and hours, rather than days and weeks. Thanks to our breadth of in-house expertise, we can be incredibly responsive to clients old and new. And with global distribution networks and logistics experience, we can ship to 99% of countries quickly – taking care of all customs documentation too. Ready when you are.

Access world-leading technologies

Over two decades, we have developed longstanding relationships with world-leading tech providers. In fact, we are an award-winning tech partner. It means we can provide you with access to cutting-edge technology at incredibly cost-effective prices – often before the technology is available elsewhere. And because we are vendor agnostic, there’s no bias towards specific brands. We cherry-pick the tech that makes the most sense for your business. It’s that simple.

Round-the-clock support 24/7/365

If your tech estate goes down, your profits do too. We give you round-the-clock access to a fleet of highly experienced IT engineers. And because we keep our support function in-house – rather than outsourcing to a third party – we take full responsibility for resolving every client support ticket. So you can be sure that help is always near if you need it. Confidence in your tech, confidence in your business.

Deployments that are right first time

Installing new tech infrastructure can sometimes feel daunting – and mistakes are costly. We can set up and test your new infrastructure at our facilities, getting everything installed and configured for your business ahead of time. Then we will box it up neatly – with all assets labelled down to the last cable – and ship your pre-configured equipment with clear instructions on setting up and going live. And if you want an engineer there to assist you, just say the word. No more downtime and disruption.
